For January, the Australian dollar was the key story. At an index level, Australian stocks rose 1.5%, but international stocks fell 2.5%, largely because of the Australian dollar. Our funds were well-positioned for the change. Our core international fund bucked the trend to rise 1.9% for the month. Not holding as many US stocks helped, and a number of core stocks outperformed significantly, which offset losses elsewhere.

January has seen some of the heat come out of prices, but the key story leading into reporting season is the strength of company earnings underpinning the market. While there are economic problems in many countries, they are mostly affecting consumers and workers – companies are doing pretty well.

Are software stocks having a “Kodak” moment?

Software stocks have been hammered on the back of AI fears. In the last couple of weeks, losses of 15–20% have been common across the sector, with the broader software and services cohort down about 30% from recent highs before a small bounce. The sell-off looks indiscriminate. Babies have been thrown out with the bathwater as investors dump the entire bucket labelled “software.”

Thirty years ago, Kodak looked like textbook quality—cash, growth, dividends—until digital photography flipped the economics. Are software stocks having a Kodak moment?

Time to reach for a checklist: what does this company actually do, who pays for it, and how will AI agents shift that equation?

Here’s my thesis in a sentence: AI agents are collapsing an entire layer of software work and integration friction at the same time that enterprise budgets are being reallocated toward AI infrastructure.

That double hit is compressing seat counts, pressuring “wrapper” software, and creating new winners deeper in the stack. It’s also opening up opportunities among quality franchises that have been sold off with the herd.

Why now: two forces hit at once

1) A step-change in coding agents

Early large language models were powerful but awkward in real developer workflows. You had to copy-paste between an IDE and a chatbot, install libraries yourself, and wrestle with version mismatches. Productivity gains were real but noisy.

Agents just leapt a level. You can now hand an AI agent your specs—problem, behaviour, constraints—and it writes code, runs it in a sandbox, installs missing libraries, fixes version conflicts, and iterates until it works.

Tools like Anthropic’s Claude Code crystallised the shift: less back-and-forth, more end-to-end execution. That is a visible break from “AI helps me type faster” to “AI builds, tests, and stitches systems without me babysitting every step.” Large firms are already reassessing development workflows. This is not a toy upgrade; it is a structural change to how software gets made.

2) Budgets are finite, and AI capex is massive

The latest reporting season underscored the scale of Big Tech’s AI buildout, with hundreds of billions earmarked for 2026 infrastructure. If a CIO has a $50 million IT budget and needs another $5 million for AI compute and platforms, something else gives. Fewer hires. Fewer licenses. Or both. I hear consistent anecdotes: lower hiring, attrition not backfilled, and a tilt toward generalists who can orchestrate AI rather than armies of narrow specialists. That translates into fewer seats for legacy tools over time.

The result: lower demand for per-seat licenses, especially in roles where AI can absorb repetitive tasks, and more dollars flowing to AI infrastructure and specialised data.

Where the impact will hit first

- Software development: Fewer juniors. You still need experts to design robust systems, but a 50-person team with 10 seniors and 40 juniors may become 6–7 seniors and 4–5 juniors. That cascades into lower license counts for IT backends and dev tools priced per user.

- Security, observability, and IT service management: Seat-based models face headwinds if headcounts fall. Think Datadog, CrowdStrike, Palo Alto Networks, ServiceNow and peers. Not all are equal—consumption-based models and platform breadth help—but the risk is real. The market’s sell-off reflects this Kodak moment fear: high-quality today can flip if the business model doesn’t adapt.

- Design and office productivity: Tools like Adobe and Canva thrive on demand for human-created content. If agents can generate on-brand images, podcast art, or video snippets on command, the number of human designers and the minutes spent in those tools can shrink. Excel faces a similar dynamic for routine tasks. If an agent can ingest data, create charts, and draft slides, many light-touch use cases get automated away. Complex modelling and specialist workflows remain, but the tail of casual usage is large.

- “All-in-one” platforms: Salesforce, HubSpot, monday.com and others have long charged a premium for orchestration—connect CRM, marketing, websites, and analytics under one roof, and save the pain of integrating best-of-breed tools. Agents make integration tractable. APIs have docs. An AI can read them, write glue code, maintain mappings, and keep systems in sync. The premium for “we integrate it for you” drops. For companies that monetise integration services and internal orchestration, that revenue is at risk unless they pivot to outcome-based value.

- Legal tech and research: Staffing pyramids flatten when agents handle first drafts and case pulls. A pyramid of one partner and five juniors can become one partner and two or three juniors. Fewer seats, weaker pricing power. RELX and Thomson Reuters still own prized datasets, but the wrapper—the UI, the integration, the training—is less valuable if switching costs fall.
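To put rough numbers on the headcount examples above, here is a minimal sketch of the seat arithmetic. It assumes one licensed seat per person, which is a simplification of mine rather than something any vendor guarantees, and the function name `seat_reduction` is purely illustrative.

```python
def seat_reduction(seats_before: int, seats_after: int) -> float:
    """Return the fractional fall in seats (0.25 means 25% fewer seats)."""
    if seats_before <= 0:
        raise ValueError("seats_before must be positive")
    return (seats_before - seats_after) / seats_before


# Development team from the text: 10 seniors + 40 juniors,
# shrinking to 6-7 seniors and 4-5 juniors.
dev_before = 10 + 40
dev_smallest = 6 + 4
dev_largest = 7 + 5

# Legal pyramid from the text: 1 partner + 5 juniors,
# shrinking to 1 partner and 2-3 juniors.
legal_before = 1 + 5
legal_smallest = 1 + 2
legal_largest = 1 + 3

# Assumption: one licensed seat per person.
for label, before, largest, smallest in [
    ("dev team", dev_before, dev_largest, dev_smallest),
    ("legal pyramid", legal_before, legal_largest, legal_smallest),
]:
    low = seat_reduction(before, largest)
    high = seat_reduction(before, smallest)
    print(f"{label}: {before} seats -> {smallest}-{largest}, "
          f"a fall of {low:.0%} to {high:.0%}")
```

On those inputs the development team loses roughly 76% to 80% of its seats and the legal pyramid 33% to 50%, which is why per-seat pricing is the first place I look for damage.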

The new economics of data and switching costs

For years, data platforms won with a simple playbook: invest heavily to curate high-quality data, sell access as a system of record, enjoy low marginal costs, and nudge prices up every year because clients were locked in. The lock-in came from bespoke integrations, API wiring, edge cases, and re-training costs.

Coding agents chip away at each piece of that lock-in. They read docs, port queries, map schemas, and maintain synchronisation. Switching is still painful, but less so. If you built your pricing power on integration friction, expect pressure. If your moat is unique, clean, and hard-to-replicate data, that moat just got more valuable. Enterprises will still pay for verified history, consistency, and provenance they can trust. The question is the wrapper: interfaces and adjacent services will commoditise.

The value is the dataset, not the old switching friction. APIs that deliver reliable, unique data retain pricing power. Anything that’s “me-too” faces a reset.

Watch the CPU comeback

One underappreciated implication of agents: they increase general-purpose compute demand. While GPUs dominate model training and many inference tasks, agents spawn tests, validations, orchestration scripts, and glue code that run better on CPUs. Remove the human copy-paste bottleneck, and agents will happily chase the wrong path for hours, burn cycles, and then correct. That’s more CPU load, more storage IO, and more network traffic. The winners here are the hyperscalers and the vendors that supply the boring but essential scaffolding—compute, networking, observability that aligns with usage, not seats.

How I’m underwriting software now: quality–value, with new definitions of quality

I use a quality–value framework, but I’m ruthless about whether “quality” is still quality in an agent-led world.

Quality:

– Durable earnings tied to non-commoditised moats (unique data, system of record status, mission-critical embed).

– High returns on invested capital, strong balance sheets, and low churn from real switching friction, not integration pain that agents can erase.

– Pricing power tied to outcomes or consumption, not headcount.

Value:

– Pay up for the best when moats are strengthening.

– Demand a deep discount for names whose moats rely on integration complexity or per-seat bloat.

– Let valuation swing while quality anchors decisions. Buy when a high-quality name moves from “expensive” to “reasonable” as price falls; trim as it stretches to the 80th–90th percentile.

Beware the Kodak trap. Quality is not static. A software company can look optically cheap versus its own history right before the underlying demand changes for good.
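The buy-and-trim rule above can be sketched as a percentile check against a stock's own valuation history. This is an illustration under stated assumptions, not my actual model: the multiples are made-up numbers, the `buy_below` threshold of 0.50 is a hypothetical stand-in for "reasonable", and `quality_ok` is a manual judgment call that encodes the Kodak trap (cheap versus history does not count if quality has broken).

```python
from bisect import bisect_right


def percentile_rank(history: list[float], current: float) -> float:
    """Share of historical observations at or below the current value (0 to 1)."""
    if not history:
        raise ValueError("history must not be empty")
    ordered = sorted(history)
    return bisect_right(ordered, current) / len(ordered)


def valuation_signal(
    history: list[float],
    current: float,
    quality_ok: bool,
    trim_at: float = 0.80,    # from the text: trim at the 80th-90th percentile
    buy_below: float = 0.50,  # assumption: a hypothetical cut-off for "reasonable"
) -> str:
    """Quality anchors the decision; valuation only decides the timing."""
    if not quality_ok:
        return "avoid"  # Kodak trap: optically cheap, but the moat is eroding
    rank = percentile_rank(history, current)
    if rank >= trim_at:
        return "trim"
    if rank <= buy_below:
        return "consider buying"
    return "hold"


# Hypothetical valuation multiples for one stock over ten periods.
multiples = [18, 20, 22, 25, 27, 30, 32, 35, 38, 40]

print(valuation_signal(multiples, 24, quality_ok=True))
print(valuation_signal(multiples, 39, quality_ok=True))
print(valuation_signal(multiples, 24, quality_ok=False))
```

The design choice that matters is the order of the checks: the quality test comes first, so a low multiple never produces a buy signal on its own.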

Case studies I’m focusing on

– Microsoft: Windows is now a much smaller piece of revenue. If Windows license volumes halved, Azure and AI could offset the drag with single-digit growth. Office remains meaningful, but the centre of gravity is Azure plus AI partnerships. Microsoft’s exposure to OpenAI matters. OpenAI led six to twelve months ago, but Gemini and Claude have caught up or pulled ahead in many benchmarks and agent orchestration use cases. If OpenAI regains the lead, Microsoft benefits. If not, Azure still wins on infrastructure demand, but the application-layer premium could be lower than the market expects.

– Salesforce, HubSpot, monday.com: I’m cutting the value of internal integration and orchestration. The defensible core is where they own the system of record and deliver measurable outcomes. If revenue depends on “we make the moving pieces work together,” I expect margin pressure and slower growth unless they shift to consumption/outcome pricing and offer deep agent-native automation that customers can’t easily replicate.

– Security/observability/service management: I favour usage-tied or data/network-effect moats over per-seat models. The more a product is priced on telemetry, events, or protected assets rather than people, the better. I’m cautious where valuation assumes headcount expansion that may not come back.

– Hyperscalers and the stack: Nvidia, Google, AWS/Amazon, and to a degree the CPU suppliers and networking names, still look like structural winners. Agents drive more tokens and more tests. That means more inference, more orchestration, more data movement. The math is messy, but the direction is clear.

How to separate babies from bathwater

– Map revenue to actual exposure. What percent is per-seat? What percent is integration services? What percent is consumption-based tied to data, events, or compute?

– Ask whether AI agents reduce time-to-value for switching. If yes, assume lower pricing power and higher churn over the next 12–24 months, even if it hasn’t shown up yet.

– Identify unique data moats. Can a credible competitor replicate the dataset in under three years with low error rates? If no, pay attention.

– Stress-test a 20–40% seat reduction in your model. If the business falls apart, you were underwriting the old world.

– Look for outcome pricing. If the vendor can charge for faster closing rates, lower fraud losses, higher uptime, or real cost takeout, resilience improves.
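The revenue-mapping and seat stress-test steps above can be combined into one rough calculation. This is a sketch only: the revenue split is hypothetical, `RevenueMix` and `revenue_decline` are illustrative names, and it assumes price per seat and consumption revenue stay flat while seats fall, which is a deliberate simplification.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RevenueMix:
    """Revenue by exposure type, in any consistent unit (e.g. $m)."""
    per_seat: float
    integration: float
    consumption: float

    @property
    def total(self) -> float:
        return self.per_seat + self.integration + self.consumption


def revenue_decline(mix: RevenueMix, seat_cut: float,
                    integration_cut: float = 0.0) -> float:
    """Fractional revenue fall after cutting seats and integration revenue.

    Assumption: price per seat and consumption revenue are held constant.
    """
    stressed = (
        mix.per_seat * (1 - seat_cut)
        + mix.integration * (1 - integration_cut)
        + mix.consumption
    )
    return 1 - stressed / mix.total


# Hypothetical company: 60% per-seat, 15% integration, 25% consumption.
mix = RevenueMix(per_seat=60.0, integration=15.0, consumption=25.0)

for seat_cut in (0.20, 0.30, 0.40):
    decline = revenue_decline(mix, seat_cut)
    print(f"{seat_cut:.0%} fewer seats -> revenue down {decline:.0%}")
```

For this made-up mix, a 20% to 40% seat cut takes 12% to 24% off revenue before any pressure on integration fees. Passing a non-zero `integration_cut` layers that second hit on top.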

My positioning today

– Lean selectively long in high-quality data platforms and true systems of record. I’ll pay a reasonable multiple for moats that get stronger as integration commoditises.

– Favour hyperscalers and parts of the compute/network stack that benefit from agent-driven load, including CPU-heavy workflows.

– Cautious on “wrapper” software and integration-heavy revenue unless management is already pivoting to agent-native products and outcome pricing.

– Underweight per-seat models tied to junior-heavy usage until valuations reflect structurally lower headcounts.

– Opportunistic in names sold off with the cohort where the “AI hurts them” story is superficial and core demand is stable or improving.

The market is treating “software” as a single trade. It isn’t. We’re moving up to a new abstraction layer where you tell the machine what you want and it assembles the pieces. That shift crushes some models and supercharges others. If you separate the wrappers from the moats, the seats from the outcomes, and the integration friction from the data that actually matters, this sell-off is an opportunity for some stocks but a trap for others.

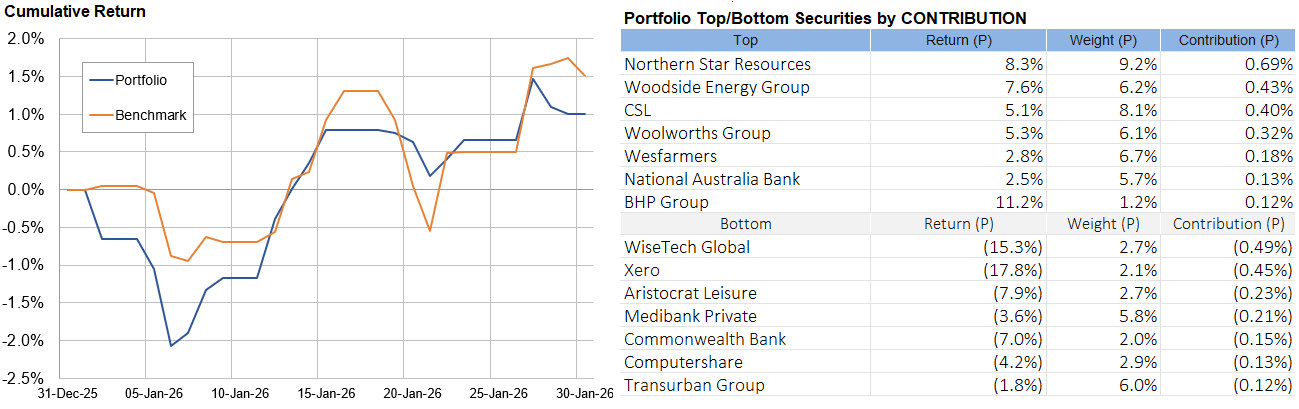

Asset allocation

We are a little underweight in shares overall and significantly underweight in Australian shares. We are market-weight in bonds, with a little foreign cash:

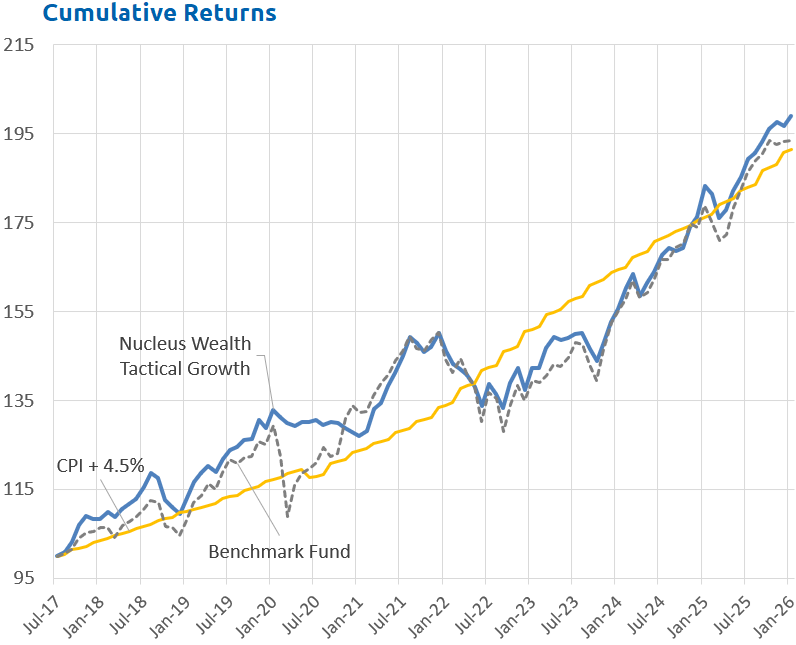

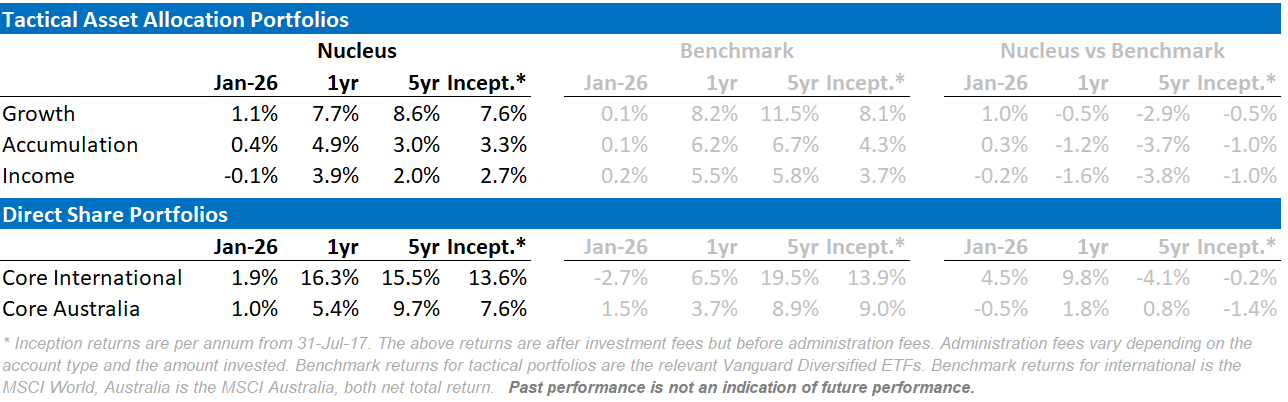

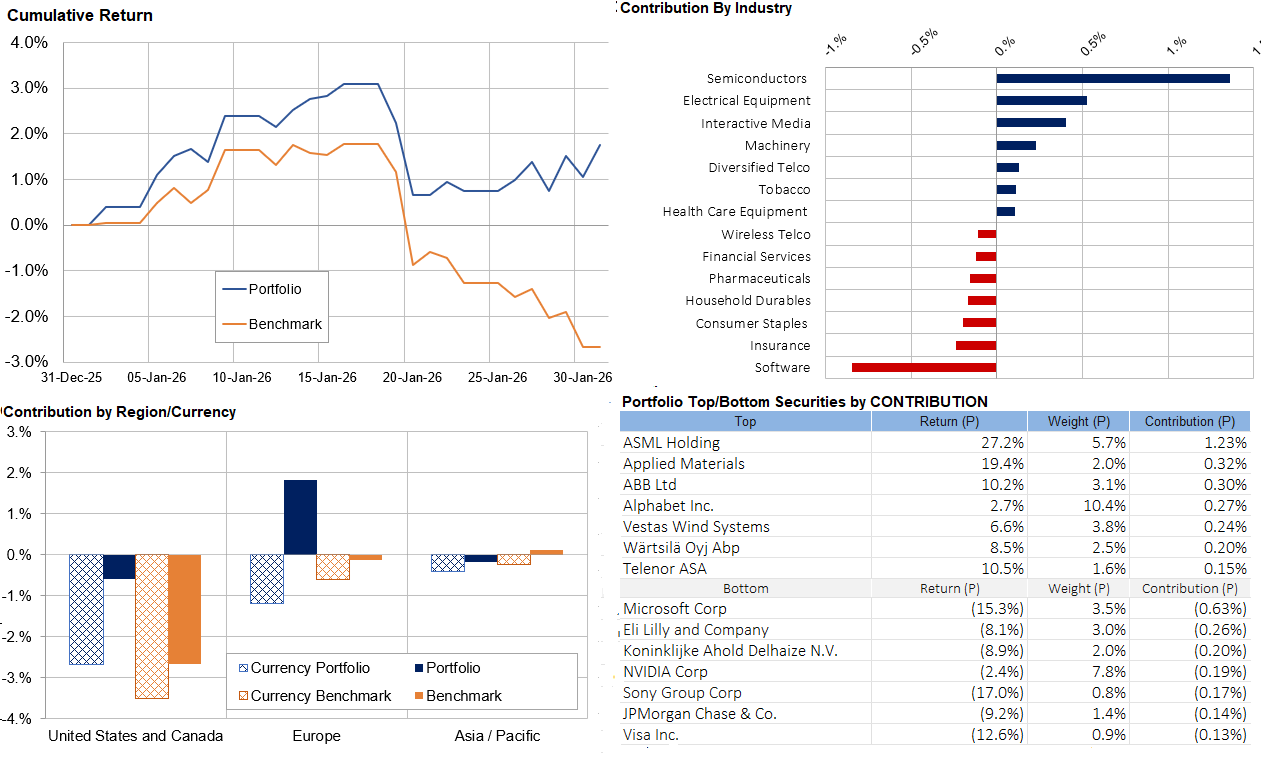

Performance Detail

Core International Performance

Our portfolio outperformed as the market melted down toward the end of the month, saved by low exposure to software stocks and the stellar performance of European semiconductor supplier ASML. Currency continued to be an overall detractor.

Core Australia Performance

Over January, Australian stocks recovered to post gains on the back of resource stock strength, offsetting Technology weakness.

Damien Klassen is Chief Investment Officer at the Macrobusiness Fund, which is powered by Nucleus Wealth.

Follow @DamienKlassen on X (Twitter) or LinkedIn

The information on this blog contains general information and does not take into account your personal objectives, financial situation or needs. Past performance is not an indication of future performance. Damien Klassen is an Authorised Representative of Nucleus Advice Pty Limited, Australian Financial Services Licensee 515796. And Nucleus Wealth is a Corporate Authorised Representative of Nucleus Advice Pty Ltd.